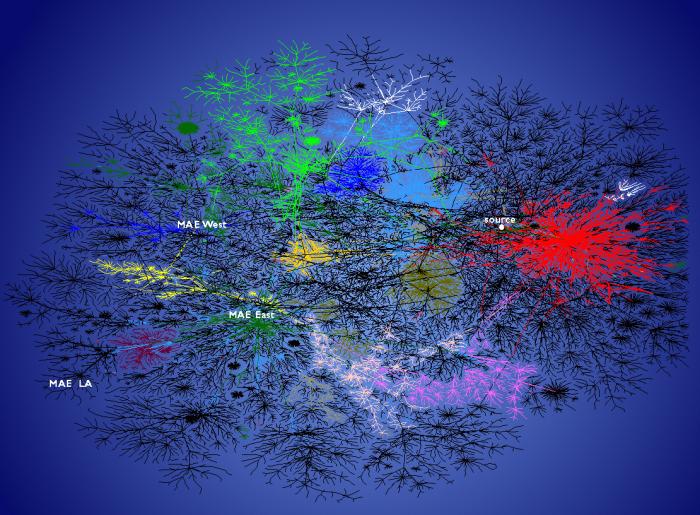

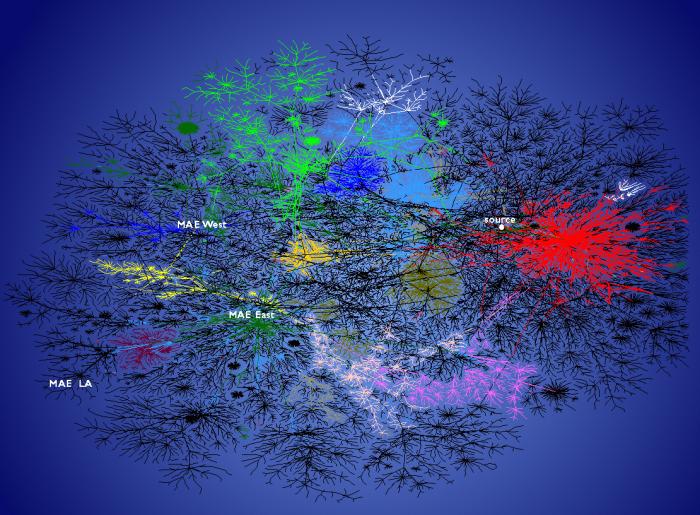

Diagram of Internet connections, showing the major Metropolitan Area Exchanges (MAE), by K.C. Claffy, republished on Albert-László Barabási's Self-Organized Networks Gallery web page.

Linked: The New Science of Networks

by Albert-László Barabási

Perseus Publishing, April 2002, Hard cover, 229 pgs, plus notes and index

Review score: *** out of *****

When I originally started this review of Albert-László Barabási's book Linked: the new science of networks, I intended to wrote an extended review that would not only review the book, but include more detail from the papers published by Barabási's research group. Research papers sometimes have an addictive quality, like some kind of narcotic. One paper leads to another, soon you're not as sure of your surroundings as you were when you started. The topic of networks has grown and changed as I've read more. This review has taken much more time and turned out much longer than I intended. I originally hoped for a smooth melding of the book review and the discussion of the more advanced research material. I was unable to forge this melding. This book review is divided into two parts: a brief review of the book Linked and a discussion of the ideas in the book and relative articles.

Albert-László Barabási's book Linked: the new science of networks is a popular science book on the research that he and his group at University of Notre Dame (in Indiana) have been doing on networks (or network abstractions). Networks include actual physical networks, like the TCP/IP routers that make up the physical substrate of the Internet and the World Wide Web. Networks also provide a powerful abstraction for viewing the world around us. Some of these are familiar from everyday discourse. In the bad old days of the cold war there were espionage networks. As the cold war died out, focus shifted to illegal narcotics networks. As terrorist events were targeted at United States assets and territory, focus has shifted again to terrorist networks, the most famous being al-Qaeda, founded by Osama bin Laden in a fusion with the Egyptian Islamic Jihad.

In Linked, Barabási includes a number of less nefarious examples of networks in the world around us. Perhaps the most famous of these is the network formed by people we know, that has been described as "six degrees of separation". Here each "degree of separation" is someone who knows someone. For example, I might be separated by "three degrees" from President Putin of Russia via my friend Mike, who knows someone who knows someone else who used to know Putin in Saint Petersburg (known then as Leningrad). Barabási's also gives examples of networks formed by actors who appeared together in movies and by the co-authors of journal articles. Barabási also looked at synthetic networks, like the networks of connections between logic gates in a VLSI microprocessor. The most interesting idea introduced by Prof. Barabási is that the connectivity of these networks follows a power law, where a fraction of the nodes have many connections and others have just a few connections.

Linked is written for a general audience. Although there are equations in some of the footnotes, Prof. Barabási generally avoids mathematics. The book provides an easy, readable introduction to the properties of networks and how they can serve as a powerful abstraction in many areas. There were a few times when I found that Linked dragged. On occasion the stories and examples meandered around a few core ideas. For those who want a general technical discussion of the ideas in Linked without the narrative account describing networks and the adventures of Prof. Barabási's research group, see The physics of the Web by Albert-László Barabási, PhysicsWeb, July 2001.

In the introduction to Linked Prof. Barabási writes "This book has a simple aim: to get you to think about networks". At least in my case, Prof. Barabási succeeded in this goal. Reading this book prompted me to start reading a wider body of literature on networks and network abstractions.

A popular book of this kind necessarily provides an engaging, but one sided view of a topic. The complexity that might exist in a text book that surveyed the area is missing. Research group leads like Prof. Barabási are not only responsible for supervising their research group, but also for raising money to fund research. Many successful research group leads have some talent for self promotion, which helps in raising research money. As long as the self promotion does not get out of hand, this is probably a virtue in a group lead, not a fault. There are lots of voices out there and you have to get noticed. Books like Linked, which are accessibly written for a general audience, help build name recognition for the author. When it comes to seeking funding (especially corporate funding), name recognition helps. Perhaps this explains the motivation for expanding what would normally be a survey article into a book length work.

The study of power law connected networks, of the type discussed in linked, is relatively new. Most of the references start in the late 1990s and many of the references I have used as a basis for this discussion appeared this year (2002). The newness of network theory makes it exciting. The idea put forth in Linked and in the research papers from Prof. Barabási's research group that complex networks "in the wild" naturally settle into a characteristic scale-free structure is an attractive model. I think that many of us want to believe attractive models. Those that are true make up the most elegant and beautiful parts of science. But a model should not be accepted simply because it is elegant. A model should explain both the observed statistical behavior and the underlying factors that produced these statistics. The work of Prof. Barabási's group has received wide spread attention, even before Linked was published. While there is consensus that the link distribution of the networks under discussion have a power law distribution, there seems to be little agreement outside of this.

Drawing conclusions in such a new and rapidly evolving area of research, which currently seems to lack consensus, is a dangerous business. I am a tourist, visiting this field. The time I can visit is limited. I will be returning to other areas where I have work that I want to finish. In such a brief stay, I can hardly claim an extensive knowledge of the literature. My brief exposure to this field has shown me that it is more complicated than the picture presented in Linked. The rest of this web page consists of a deeper discussion of networks and network models, largely drawn from the research literature.

A network consists of nodes and links that connect the nodes. A network is usually fully connected: a path exists from a particular node to any other node (unconnected networks can be viewed as separate networks). In a network, effects flow across links, which may span multiple nodes. Networks provide a powerful abstraction for many processes, including the Internet and a variety of social organizations. Although a network may be a static object, like the network formed by journal article co-authors before the year 2002, networks are frequently viewed as growing and evolving structures.

Mathematicians refer to networks as graphs. A graph consists of edges (links) and vertices (nodes). In Linked, Prof. Barabási gives a brief historical overview of random graphs and the work of the Hungarian mathematicians Paul Erdös and Alfred Rényi. In random graphs each node is connected to a fixed number of other nodes in a random fashion. For example, in a random graph where each node has two links, there will be two neighbors randomly connected to each node.

The homogeneous connections (e.g., every node has n links) of a random Erdös-Rényi graph are rarely, if ever, found in networks "in the wild" (outside of the minds of mathematicians). Roads and airline routes are not evenly distributed between cities, but are concentrated heavily in big cities. Some people are more outgoing and have many more social connections than others. The research done by Albert-László Barabási's group and others shows many naturally occurring networks self organize into a hub-hased network. In a hub based network, a few nodes will have many connections while the majority of nodes will have only a few links.

Hub based networks have a high degree of interconnectivity, especially near the hub nodes. This contributes to the "small world" effect (this term comes from the feeling that "it is a small world" when we meet someone new who knows another person we know). The small world effect is not surprising when we consider a network topology that consists of some highly connected nodes. The path between any two nodes (say two people in a social network) is proportional to the connectivity. If, on average, the network has k links per node and N nodes, the average path will be logk( N ). In computer science examples of such highly connected graphs include B-trees which are used to support high speed database access of large volumes of data. In a B-tree, each node in a tree structure has multiple links to nodes farther down in the tree.

The regular structure of regular graphs, like B-trees, is of limited use in understanding the characteristics of a hub based network. In these networks, the path length will be determined by the distance a node is from a hub.

Many characteristics in fall into a "bell curve", or Gaussian distribution. Examples include the height and weight of animals, the daily return of a stock or the grades on a college exam. Since so many characteristics fall into a bell curve, it might be naively expected that the distribution of links in a naturally occurring network follow this pattern as well. One of the most fascinating things to be learned from reading Linked is that this is not the case. The distribution of links in a variety of networks follows a power law.

A power law distribution is simply a function like y = xz. Like the Gaussian distribution, power laws are found in many places in nature. In a developing embryo cellular growth initially follows an exponential power law that is approximately y = 2n. If, at every time step the number of cells doubles, this equation will tell us the number of cells at time step n. As Thomas Malthus pointed out, unconstrained population also follows a power law of exponential growth. The amount of data stored on the World Wide Web (WWW) can also be described by the exponential growth curve of a power law.

Power laws describe Brownian motion, which is sometimes also referred to as a random walk. The distance R covered by a particle undergoing random collisions is directly proportional to the square-root of time, T:

R = kT0.5

where k is a constant.

Many objects, like growing silver crystals or coast lines have fractal shapes. The power law describing a random walk can also be used to describe the characteristics of a fractal shapes. For example, one measure of the smoothness of a fractal shape is given by its fractal dimension, which is described by NrD. An example of a fractal dimension is the Hurst exponent, H, which has values in the range 0 < H < 1. A Hurst exponent of 0.5 is a random walk. A Hurst exponent less than 0.5 indicates a random process where increases are more likely to be followed by decreases (mean reversion). Random processes that follow a trend (increases are followed by increases, decreases are followed by decreases) will have a Hurst exponent greater than 0.5.

In the case of a power law network, the number of nodes with k links is N(k) ~ k-gamma. When Barabási and his colleagues looked at the statistics for incoming web page links (web pages are pointed to be other web pages) they found a value for gamma close to two (gamma = 2.1). For outgoing links (links from a web page to other web pages) they found a slightly larger value for gamma (gamma = 2.5).

The equation N(k) ~ k-2.1 can also be expressed as N(k) ~ 1/k2.1. This is an asintotic curve that approaches zero as k approaches infinity. A curve like this suggests that a network will have a few nodes with many links (hubs) and many more nodes with just a few links. The idea that networks "in the wild" follow this kind of power law structure seems to be widely accepted, although the processes that generate networks is a topic of active discussion.

Barabási and his colleagues refer to networks where the links fall into a power law distribution as scale-free. I have not found a clear concise dictionary style definition in their work for what they mean by this term. Apparently, scale refers to the degree of the node (the number of links it possesses), not the scale of the observation (for example, self-similar fractal shapes have the same statistical characteristics, at different observation scales). One paper used a somewhat recursive definition: The network whose degree distribution follows a power law is called a scale-free network. The best definition I've found comes from Scaling phenomena in the Internet: Critically examining criticality by Willinger et al, Proc. Nat. Acad. Sci, Feb 19, 2002.

Intuitively, the significance of the discovery is that the vertex degrees observed in the Internet AS graph are highly variable. In fact, such highly variable vertex degrees have been unheard of in the context of the traditional and well studied Erdös-Rényi-type random graph models or the more hierarchical graph structures that have been proposed as realistic topology models in the networking literature. In both of these cases, the vertex degree distribution tends to be sharply concentrated around some "typical" node degree (e.g., the mean of the distribution), with essentially negligible probability for encountering vertex degrees that deviate by, say, more than one order of magnitude from the mean. Because of the absence of any such "typical" node degrees in graphs that exhibit power-law vertex degree distributions, these power-law graphs are also called scale-free graphs.

Italics added in the above quote.

One of the ways a hub based network can be grown is through "preferential attachment". When a new node is added to a network, the links from the new node will have a higher likelihood of being attached to nodes that already have many links. For example, if the new node initially starts out with two links, these links will have a higher probability of being attached to nodes with many links. This does not mean that a link will not be attached to a node with only a few existing links, just that it is less probable.

Preferential attachment will favor older nodes, since they will have had an opportunity to collect links. One of the examples of a naturally occurring preferential attachment network in Linked is in networks formed from journal article citations. Early journal articles on a given topic more likely to be cited. Once cited, this material is more likely to be cited again in new articles, so original articles in a field have a higher likelihood of becoming hubs in a network of references.

Preferential attachment in web pages on the World Wide Web is reinforced by search tools like the Google search engine, which uses a ranking algorithm that is based on links:

PageRank relies on the uniquely democratic nature of the web by using its vast link structure as an indicator of an individual page's value. In essence, Google interprets a link from page A to page B as a vote, by page A, for page B. But, Google looks at more than the sheer volume of votes, or links a page receives; it also analyzes the page that casts the vote. Votes cast by pages that are themselves "important" weigh more heavily and help to make other pages "important."

A search using Google is more likely to find hub web pages that are pointed to by other web pages. The author of a new web page will then be more likely to add a link to this hub page. This behavior in networks, where hubs tend to be reinforced, is sometimes referred to as "the rich get richer.

Formation of hub based networks is not limited to a "rich get richer" growth rule. Hub based networks can also form on the basis of "fitness". In such a network, not all nodes have equal fitness. When a new node is added to the network, it will preferentially attach to nodes with a higher fitness value. There are many examples of networks that grow through fitness rules, where new nodes with high fitness form dominate hubs at the expense of existing nodes. For example, in the early days of the World Wide Web (1995), the AltaVista search engine, developed by Digital Equipment Corporation's Western Research Labs, was one of the most popular search engines. As a result, AltaVista was widely used and referenced (it became a hub). Three years later Google appeared on the scene with search technology that many believe is far superior to what AltaVista had to offer. If my experience and the experience of the people I know is any guide, Google has formed a dominate hub, although it appeared three years later than AltaVista.

As the example of Google and AltaVista show, network models based on fitness and competition can be dynamic. AltaVista did not disappear, it became a more minor node. Google has become the major node, but there are other competitors, like alltheweb.com. Just as Google displaced AltaVista, it is possible that a competitor with sufficiently strong advantages (fitness) could displace Google.

There is another network model proposed by Prof. Barabási's research group: winner take all. In this case a single node becomes so strong that it dominates the graph. In Linked (pg. 103-104) Barabási writes

Are there real networks that display true winner-takes-all behavior? We can now predict whether a given network will follow the fit-get-rich or winner-takes-all behavior by looking at its fitness distribution. Fitness, however, remains a somewhat elusive quantity, since the tools to precisely measure the fitness of an individual node are still being developed. But winner-takes-all behavior has such a singular and visible impact on a network's structure that, if present, it is hard to miss. It destroys the hierarchy of hubs characterizing the scale-free topology, turning it into a starlike network, with a single node grabbing all the links.

According to Ginestra Bianconi, one of Barabási's graduate students, the collapse of a network into a single dominate node can be modeling using the same mathematics that describes Bose-Einstein condensation, where the atoms in a gas settle into the same quantum state (see Bose-Einstein Condensation in Complex Networks by Ginestra Bianconi and Albert-László Barabási).

The example which Prof. Barabási gives in Linked for a network which has collapsed into dominance by a single node is the network of computers running a particular operating system. This network is dominated by Microsoft.

In the last few years experiments have shown that under the proper conditions, gases do actually collapse into a single quantum state. The idea that network collapse can be modeled in the same way is attractive. But is there any reason to believe that the Bose-Einstein model applies in this case? There was no evidence presented for this. In fact, there was no evidence presented that the dominance of Microsoft can actually be explained through a fitness model. Another model, proposed by Brian Arthur, is referred to as path dependence and is explicitly not based on fitness.

Conventional economic theory is built on the assumption of diminishing returns. Economic actions eventually engender a negative feedback that leads to a predictable equilibrium for prices and market shares. Negative feedback tends to stabilize the economy because any major changes will be offset by the very reactions they generate. The high oil prices of the 1970's encouraged energy conservation and increased oil exploration, precipitating a predictable drop in prices by 198x. According to conventional theory the equilibrium marks the "best" outcome possible under the circumstances: the most efficient use and allocation of resources.

Such an agreeable picture often does violence to reality. In many parts of the economy stabilizing forces appear not to operate. Instead, positive feedback magnifies the effect of small economic shifts; the economic models that describe such effects differ vastly from the conventional ones. Diminishing returns imply a single equilibrium point for the economy, but positive feedback.increasing returns.make for multiple equilibrium points. There is no guarantee that the particular economic outcome selected from among the many alternatives will be the "best" one. Furthermore, once chance economic forces select a particular path, it may become locked in regardless of the advantages of other paths. If one product or nation in a competitive marketplace gets ahead by "chance" it tends to stay ahead and even increase its lead. Predictable, shared markets are no longer guaranteed.

Quoted from Positive Feedbacks in the Economy by W. Brian Arthur, Published in Scientific American, 262, 92-99, Feb. 1990. This paper is also reprinted in Brian Arthur's book Increasing Returns and Path Dependence in the Economy, The University of Michigan Press, 1994

Microsoft became a dominate force in the computer industry because of the primitive operating system Microsoft supplied to IBM. IBM called this operating system PC-DOS. Microsoft retained the right to sell this software and called their version MS-DOS. At the time IBM adopted Microsoft's DOS operating system, there were other operating systems which had a significant user base (in particular, a primitive operating system called CP/M). By entering the market for personal computers, IBM created a hardware standard, which other companies copied. These other companies also licensed Microsoft's DOS.

DOS was not better than other existing software and it certainly did not represent the best that could be implemented on the hardware that was available at the time. However, because Microsoft's DOS existed on the most popular hardware platform, it gained a large user base. This in turn meant that application developers wrote software for DOS. This solidified DOS as a standard, as more and more applications became available.

Whether a network model is useful for explaining the domination of Microsoft in an economic network remains to be seen. Following the logic of path dependence, such a model would be based on a degenerate case of preferential attachment, rather than fitness. Here, the attachment of a new node depends heavily on the number of links an existing node has. As more links are added, this preference grows strongly, until almost all new nodes that are added to the network attach to a single hub.

To restate the history of DOS in the terms the degenerate preferential attachment network model: MS-DOS dominated the network of personal computer users because it quickly formed a large hub. The preferential attachment to this hub became so strong that virtually all users of IBM PC architecture systems used Microsoft's DOS.

In constructing a model the builder attempts to capture the important features, while leaving out detail that is not critical. For example, an economist building a model of a stock market will try to build a model with the important market features, without attempting to model the thousands of market participants. How successfully a model captures the behavior of a complex system can be judged by a number of factors including:

Statistical behavior.

Does the model show the same statistical behavior that is seen in the real world.

Predictive power.

Given a set of starting conditions, can the model predict the outcome in the real world, with some degree of accuracy.

Robustness

When new data is obtained, which was not used to develop the model, can the model still explain this data. For example, as we look at the real world in finer detail, does this detail correspond with the network model. The model must be true at more than one scale.

In modeling networks a variety of problems are encountered:

Networks are both an abstraction that can be useful in describing observations and an actual description of structure. For example, a network abstraction can be used to describe a set of enzyme interactions in yeast (see Lethality and centrality in protein networks, by Hawoong Jeong, Sean Mason, Albert-László Barabási and Zoltan N. Oltvai, Nature 411, 41-42 (2001)). While this may be a useful abstraction, there are other abstractions that can be used to describe chains of interdependent biological events. In contrast, the physical structure of the Internet is a network, composed of bridges at the local level and routers at higher levels. Abstractions are useful only in their power to illuminate. An abstraction that is used too broadly may suggest results that are incorrect.

When a network model is built, it must be shown that it is something more than a castle in the clouds. The model should display some of the characteristics of the larger system. For many complex biological systems and even for the Internet, the actual functioning of the system is not fully understood. Demonstrating that a model is valid may be difficult when a information about the real system is missing.

The model should display structure and statistical properties that have some similarity to the real world case that is being modeled.

In Hierarchical organization in complex networks (Erzsábet Ravasz and Albert-László Barabási, Sept. 1, 2002) the authors propose a recursive, fractal, algorithm for constructing networks. The networks constructed using this algorithm have links with a power law distribution and a network of any size is scale-free. While these are both properties that the author claim exist for many network "in the wild", it is very likely that networks "in the wild" do not grow in this manner. A more realistic network growth model would grow as real networks grow, the the addition of one node at a time.

In some cases there is an almost breathless quality in Linked, as Barabási's research group finds power laws where ever they look. For example, Barabási writes that his student, Réka Albert, found that the connectivity in a VLSI netlist for an IBM chip followed a power law:

Jay Brockman, a computer science professor at Notre Dame, gave us data on a man-made network, the wiring diagram of a computer chip manufactured by IBM. Before I left for Europe, my graduate student Réka Albert and I agreed that she would analyze these networks. On June 14, a week after my departure, I received a long e-mail from her detailing some ongoing activities. A the end of the message there was a sentence added like an afterthought: "I looked at the degree distribution too, and in almost all systems (IBM, actors, power grid) the tail of the distribution follows a power law."

Linked: the new science of networks by Albert-László Barabási, Perseus Publishing, 2002, Pg. 80

The VLSI netlist that connects the digital logic gates that make up an integrated circuit are the result of design. Chip designers follow a design methodology that results in a highly modular design hierarchy. It has been widely reported in the literature that connectivity in VLSI chips follows a power law. In the case of VLSI designs, the "hubs" of the design are the large modules which make up the internal architecture of the chip. Inside these modules the network that connects the logic elements does have a characteristic scale (e.g., a characteristic number of input and output links). Devices like cascade multipliers have a highly regular, crystalline, structure. The logic that implements an on-chip cache and the register file is equally regular in structure. Only when modules are viewed as large blocks does the power law connectivity emerge.

Focusing on this kind of low level detail may seem picky, but this example illustrates the problem of attempting to analyze the structure of very large networks. The statistical structure is relatively easy to discover (e.g., the link distribution follows a power law). This statistical result tells us nothing about the process that generated this distribution.

By focusing on the statistics without closely examining the structure that generated these statistics Barabási and his colleagues run the danger of overly broad generalizations and incorrect conclusions.

Networks and systems where the nodes have power law distributed connections can be resistant to random failure, but are vulnerable to carefully targeted attack.

The robustness of scale-free networks is rooted in their extremely inhomogeneous connectivity distribution: because the power-law distribution implies that the majority of nodes have only a few links, nodes with small connectivity will be selected with much higher probability. The removal of these "small" nodes does not alter the path structure of the remaining nodes, and thus has no impact on the overall network topology.

An informed agent that attempts to deliberately damage a network will not eliminate the nodes randomly, but will preferentially target the most connected nodes.

Error and attack tolerance of complex networks by Réka Albert, Hawoong Jeong and Albert-László Barabási, Nature, July 2000, Pg. 378

The idea that a network can be crippled by targeting the highly connected hubs is a something that we intuitively know:

The Italian Mafia was largely destroyed as a force in organized crime by sentencing the leaders (the highly connected hubs of the organization) to long jail terms. Obviously a campaign of jailing low level Mafia goons would not have had as powerful an effect on the organization.

Historically, in war, snipers preferentially target the hubs of the opposition (e.g., the officers).

Israel has targeted the leaders of organizations like Al Fateh and Hamas in an attempt to cripple these groups.

Campaigns against illegal narcotics, in theory, target large dealers and importers.

Although it is generally thought that attacks on networks with distributed resource management are less successful, our results indicate otherwise. The topological weaknesses of the current communication networks, rooted in their inhomogeneous connectivity distribution, seriously reduce their attack survivability. This could be exploited by those seeking to damage these systems.

Error and attack tolerance of complex networks by Réka Albert, Hawoong Jeong and Albert-László Barabási, Nature, July 2000, Pg. 378

Perhaps the first question to ask is: Does the link distribution of the routers that make up the Internet follow a power law suggesting a scale free network? Measurement of the actual structure of the Internet is not an easy task and is the subject of ongoing research (see Topology discovery by active probing Bradley Huffaker, Daniel Plummer, David Moore, and k claffy, June 2002). Earlier research, based on the border gateway protocol routing table that connect the sub-networks that make up the Internet does suggest that the Internet is a scale-free network. Visualizations of the Internet also suggest a hub based network, which gives rise to the power law distribution. For example:

Diagram of Internet connections, showing the major Metropolitan Area

Exchanges (MAE), by K.C. Claffy, republished on

Albert-László Barabási's Self-Organized Networks

Gallery web page.

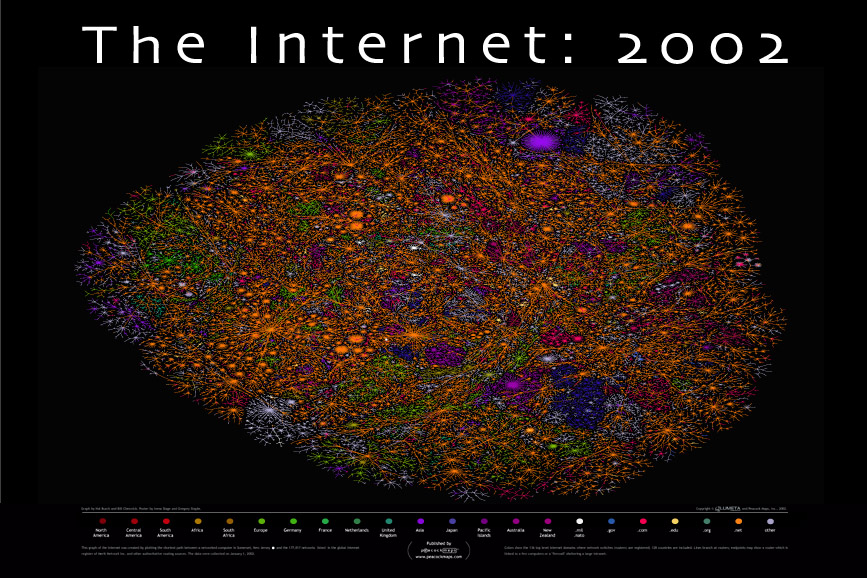

The Internet has become so large that visualization of its structure has become very difficult. Many of the visualizations of the Internet are both beautiful and very difficult to understand. For example, the image below is from a poster available from Peacock Maps

Copyright Peacock Maps, 2002, used with permission.

Prof. Barabási and his colleagues point out that hub based networks are vulnerable to attack. From this they conclude that the Internet, which is a hub based network, is also vulnerable to attack on a relatively small collection of hub node. This is a rather scary idea. The Internet is an increasingly important part of the world wide technology infrastructure. An attack that crippled the Internet could seriously do harm to the United States. At a time when there is a great deal of attention given to terrorist threats, a systemic vulnerability of this kind is not to be taken lightly. In a Scientific American article Prof. Barabási and his co-author Eric Bonabeau write:

The Achilles' heel of scale-free networks raises a compelling question: How many hubs are essential? Recent research suggests that, generally speaking, the simultaneous elimination of a few as 5 to 15 percent of all hubs can crash a system. For the Internet, our experiments imply that a highly coordinated attack -- first removing the largest hub, then the next largest, and so on -- could cause significant disruptions after the elimination of just several hubs. Therefore, protecting the hubs is perhaps the most effective way to avoid large-scale disruptions caused by malicious cyber-attacks.

Scale-Free Networks by Albert-László Barabási and Eric Bonabeau, Scientific American, May 2003

Is there actually any basis for the fear that the Internet could be disabled by attacking a set of highly connected hubs? Prof. Barabási and his research group have not shown that such a threat really exists. In fact, they seem to have largely ignored the actual physical structure of the Internet. In some cases Barabási does quote research papers on physical structure, but the relationship between bandwidth, network structure and robustness seems to have been lost on him. In earlier work, Barabási refers to experiments that used a web crawler, to follow web page links, gathering statistics on an abstract information network composed of web pages and their links. It was not always clear to me that Barabási clearly separated the structure of the information that is stored in the global network from the physical network structure. The physical network that we call the Internet is composed of computers, coaxial cable, fiber optic cable, network amplifiers, transceivers, bridges and routers. Any attack on the Internet would be aimed at the hardware and software that makes up this network.

In Scaling phenomena in the Internet: Critically examining criticality by Walter Willinger et al they do present measurements that suggest that the Internet is a scale free network, where the number of links N(k) is proportional to k-gamma, where gamma is about 2.1. Given that the Internet is hub based, will an attack on a relatively small number of hubs cripple the Internet as a whole? This depends on whether the hubs are actually responsible for important connectivity. As Prof. John Doyle, at the California Institute of Technology, points out, the Internet is backbone based:

The "hidden fragilities" that are claimed to be in the Internet are simply not there. There are no central, highly connected hubs. There is a simple reason for this: the high speed routers that make up the backbone of the network necessarily have low connectivity. This structure is driven by basic technological constraints: you can switch a few lines very fast or a lot of lines more slowly, but not a lot of lines very fast. The highly connected hubs exist at the periphery of the network to concentrate lower speed connections (for example, concentrators for dial-up or DSL lines).

Prof. John Doyle, Cal. Tech., personal communication, December 2002.

Although power laws do not seem to tell us much about Internet vulnerabilities, this does not mean that the Internet cannot be harmed by targeted attacks at a few selected points. If the Internet were visualized not by connectivity, but by bandwidth, the visualization might be expected to show a set of backbone links, which connect major population centers. Note that these backbones form fanout points, not hubs. In the United States the connecting points of the Internet backbone are referred to as the Metropolitan Area Exchanges (MAE) (see Simpson Garfinkel's 1996 account of a visit to MAE West, Where Streams Converge).

An event that harms these exchanges has the potential to harm the Internet as a whole. In this age of terrorist worries, there is a tendency to think that such harm might come from a terrorist event. In fact the greatest threat to the Internet in the year 2002 has been the era of corporate greed and fraud. MAE West and a significant fraction of the metropolitan infrastructure was operated by Metropolitan Fiber Systems, which was purchased by WorldCom Communications in 1996. In 2002 WorldCom declared bankruptcy as a result of vastly overstated profits. The ex-Chief Financial Officer of WorldCom, Scott Sullivan, is awaiting trial on criminal charges as I write this. When WorldCom declared bankruptcy there was serious concern that WorldCom might stop operating important parts of the Internet backbone. So far these fears have not materialized and WorldCom continues to operate portions of the Internet backbone while in bankruptcy proceedings.

Another well known Internet vulnerability involves the the root servers which provide Domain Name Service (DNS) support. Currently thirteen of these servers exist in several countries. In Took a Licking, Kept on Ticking, IEEE Spectrum, December 2002, Steven M. Cherry discusses the October 21, 2002 attack that managed to disable the majority of these root servers. An attack that entirely disabled the root servers would have harmed the ability of Web servers and other users of DNS to resolve addresses like bearcave.com to their underlying IP addresses.

Fort N.O.C.'s:The heart of Internet security lies in obscurity by Brock N. Meeks, January 20, 2004

This article discusses Brock Meeks visit to one of the thirteen Internet root servers in Virginia. The entry control security would keep out the average hacker, but it does not sound like it would stop an armed group. On the other hand, wiping out a single root server will not bring down the Internet, so perhaps such measures are not necessary.

In Linked Prof. Barabási discusses several examples of cascading failure. In Linked Barabási suggests that large scale cascading failure may be the result of failure spreading from highly connected hub to highly connected hub.

My reading on network theory, as it is discussed in Linked lead me to the papers by Carlson and Doyle on Highly Optimized Tolerance (HOT). This was a somewhat accidental journey, since those working on HOT theory sometimes strongly disagree with some of the claims made by the network theorists. Despite these disagreements, I believe that there may be a connection between the observations of HOT theory and network theory, although this connection is currently only speculative.

Like network theory, HOT theory has been developed in the last few years and is still a developing research area. HOT theory examines robustness and catastrophic failure in large complex systems. HOT applies to complex systems which show optimal behavior in some dimension. For example, one of the early abstract models of a HOT system involves a theoretical forest. Random "sparks", with a particular distribution, land in the forest and burn areas of trees that are interconnected. A forest manager can build fire breaks that separate one section of the forest from another, preventing the spread of a forest fire caused by a random spark. An optimized forest is one that preserves the number of trees, while minimizing the damage caused by forest fire. Using a variety of optimization algorithms, Carlson and Doyle found that the optimized abstract forest was robust for one distribution of sparks, but could catastrophically fragile in the case of another distribution. The losses in the forest fire model followed a power law.

The forest fire model is a toy model and it is not clear yet whether it relates to actual forests and forest fires. This work is suggestive for real worlds systems, however. Many systems exist in optimized states, either as a result of design or evolution. In the case of an evolved system, they resist failure in the environment in which the system evolved. Designed systems resist failure in the cases envisioned by the designers. Small events, which have never been encountered before or which the designers did not expect can cause catastrophic system failure.

Our design strategy was essentially predictive: based on extensive experience with such systems, we attempted to reason about the behavior of the software components, algorithms, protocols, and hardware elements of the system, as well as the workloads it would receive.

[...]In all cases, the anomalies arose because of an unforeseen perturbation to the system that resulted in the violation of one of our operating assumptions; the consequences of these violations were unusually severe.

Robustness in Complex Systems by Steven D. Gribble, Proceedings of the 8th Workshop on Hot Topics in Operating Systems

Large complex systems exhibit emergent behavior, which cannot be reproduced in a smaller, simpler system. This behavior is not predicted by the system designers and can only be understood my measurement and discovery. Seemingly small changes can cause large scale global effects. A butterfly flaps its wings and the system crashes.

The implications of complex systems, emergent phenomena and system fragility are usually looked at in relation to engineered systems, like large router networks (e.g., the Internet). By studying HOT and looking for models for complex behavior, we can hope to design better systems. Complex systems also exist in the society around us, particularly in the financial system.

The financial system in the modern world is also an optimized system that can be viewed as a strongly hub based network. The hubs consist of the large banks (e.g., CitiCorp, United Bank of Switzerland (UBS), Bank of America). These banks have been closely involved with the large market trading companies like Goldman Sachs. In many cases, the large banks have purchased trading companies. It is reasonable to speculate that the size of the large banks, like the distribution of wealth, follows Pareto's Law (which is a asintotic power law).

The financial system in the United States is a result of both design and evolution. Rules have been put in place which attempt to avoid serious failure. The system has evolved in the face of strong evolutionary forces, which exist because of the desire of wealth. The rules that govern the financial system are usually a response to historical events. One might expect that a system as large as the US financial system would only be vulnerable to large events (e.g., the demise of the Internet bubble). However, there is reason to believe that the US financial system, like any highly engineered optimized system, is prone to failure caused by small unexpected events. The most stark example of this was demonstrated by a hedge fund named Long Term Capital Management (see Inventing Money by Nicholas Dunbar, John Wiley and Sons, 2000).

At its peak, Long Term Capital Management (LTCM) had an investment base of $4 billion. This made LTCM a large hedge fund, but it was dwarfed by the large mutual funds. While a failure of LTCM could cause pain to those involved with the hedge fund, such a failure would not be expected to have any effect on the huge US financial markets. As it turned out, this expectation is wrong. LTCM differed from mutual funds and even other hedge funds by the size of the positions they took and the amount of leverage (credit) they used. By using large amounts of leverage, LTCM could take advantage of small changes in the market to make large amounts of money. For several years LTCM has a 40% return, after fees were paid to the fund managers. The other side of the profits that can be realized from leverage is risk and loss. LTCM controlled huge financial positions. When their investments went against LTCM, there was a danger that LTCM would default on their investment contracts. It was feared that an LTCM default would start a cascade of failure that could have engulfed the large investment firms and banks, which in turn could have caused serious economic damage. The United States Federal Reserve organized a bail-out of LTCM to avoid this.

In general the complex systems that make up the modern world are robust in the face of expected losses. That a relatively small investment fund could cause cascading failure in the US financial system was unexpected. There were no rules (design) in place to protect the system against this unforeseen failure.

Humans are building increasingly complex systems. Anyone who has been involved in the design, development, implementation and testing of a large complex system knows that these systems are fragile. Evidence is starting to appear that complex optimized systems, like large software codes, exhibit scale-free network structure (see Scale-free Networks from Optimal Design by S. Valverde, R. Ferrer Cancho and R.V. Sole). Network theory and the theory of highly optimized tolerance gives us a framework to think about large systems and their failure modes. This suggests two questions:

Are there lessons that can be applied to complex system design to produce systems which are more reliable?

Are there models that can help us understand the behavior of complex systems?

One of the lessons suggested by both Gribble and Newman et al is that systems should not be operated near their capacity limits. Systems that operate near their designed limits exhibit fragility and catastrophic failure from small events. A robust system would be designed with some excess capacity that would only be used sporadically.

The idea of designing a system with excess capacity is common in structural engineering. For example, bridges are commonly designed with excess capacity. While few people would suggest that bridges be designed to only support their expected maximum load, we tend to allow computer systems to operate near peak loads.

Excess capacity can be built into designed systems, but the issue is more complicated in evolved systems. The opportunity for financial gain which is aggressively persued by market participants may push markets into critical areas where they are vulnerable to failure as a result of small events. No clear understanding exists of where these limits exist or how to recognize them. Even if market limits could be defined and recognized, market participants would strongly resist anything that moved them away from these edges, since large profits (and losses) also exist at these extremes.

Part of the promise that the study of networks and highly optimized tolerance holds out is that useful models might be developed, beyond the intellectual frameworks that currently exist. This is a difficult task, since most of the systems that we want to model show complex emergent behavior that cannot be reproduced in smaller test systems. Modeling complex systems is a worth while goal, but there is reason to believe that success will exist in only carefully constrained areas.

Network theory and HOT theory show that many complex systems have similar characteristics. The successes and failures in modeling one complex system may apply to another. For a half century modern mathematics and statistics have been applied in an attempt to understand markets and economics. There have been some real successes (for example, in understanding stock portfolio construction and market options pricing). But models of the larger economy have not proven to be very useful. Although economics is a larger and more complex system than the systems we design, the limitations in economic modeling may also apply to large complex systems engineered systems.

These are bibliography web pages, with Web links to other references.

Self-Organized Networks (Barabási's group), University of Notre Dame Physics Department

International Network for Social Network Analysis

Lots of references to network applications in social science settings. Plus a rather Barabási style overheated headline Network research points to global AIDS solution. This is discussed in Linked. The proposal is to target the "hubs" of AIDS spread. These hubs consist of the individuals who have many sexual partners. By knocking out the hubs, it is reasoned, the spread of AIDS can be greatly slowed.

The Knowledge Discovery Laboratory, University of Massachusetts, Amherst, Department of Computer Science

This group is lead by Prof. David Jensen. Their focus seems to be on statistical (Bayesian) techniques to discover information in semantic graphs (networks, in the sense meant here). Prof. Jensen's group is also doing work on agent based systems and data mining.

According to the Associated Press article Pentagon continues some 'data mining': Controversial office eliminated, but not all research, February 23, 2004 (reprinted on MSNBC) some of this work was originally funded by the infamous Admiral John Poindexter (Ret.) at DARPA as part of the "Total Information Awareness" program. As far as I'm concerned this simply means that Adm. Poindexter funded some good programs, although, as his history demonstrates, he is tone deaf when it comes to political issues.

Mark Newman, Professor of Physics and Complex Systems, University of Michigan

Prof. Newman has published interesting work on both networks and robustness (making it difficult to know where to place him in this catagorization). Some of this work deals with the analysis of very large networks. Prof. Newman's web site includes web links to his papers.

Along with his contributions to network theory, Prof. Newman has published an interesting review article, The Structure and Function of Complex Networks

Robustness and the Internet, California Institute of Technology Network Laboratory

Robustness in Natural, Engineering, and Social Systems, Santa Fe Institute

John Doyle's web page at Cal. Tech.

This web page includes links to articles written or co-authored by John Doyle on Networks and Robustness.

Jean Carlson's web page at UC Santa Barbara

Prof. Jean Carlson has co-authored a number of papers with John Doyle on Networks and Robustness.

In some of the literature discussion power laws, there is a mention of Zipf's law. Zipf's law is an asintotic power law that describes a number of observations, the occupance of works in English and income distribution. Zipf's law is P(i) ~ i-a, where a is close to 1.0. A related power law is Pareto's law. For a good discussion of Zipf's law, Pareto's law and power laws see Zipf, Power-laws, and Pareto - a ranking tutorial, by Lada A. Adamic

Six Degrees of Lois Weisberg She's a grandmother, she lives in a big house in Chicago, and you've never heard of her. Does she run the world? by Malcolm Gladwell, The New Yorker, January 11, 1999

This article is on social networks. The article is from Gladwell's web site gladwell.com. I've read a number of his articles in the New Yorker. As an essayist, Gladwell is simply fantastic. Very smart and a very good writer. I also read his book The Tipping Point (reviewed here), which covers some network issues from a social context point of view. While this book was interesting, I found it dragged some.

Power Laws, Weblogs, and Inequality by Clay Shirky

Clay Shirky is difficult to classify. It would be fair to say that he is not a researcher and this is a more non-technical reference than most of those listed here. This web page describes an interesting example of the formation of hubs in "blog" hits. (A "blog" is a weblog, a sort of daily diary and free form essay that is updated every day. Blogs have become very popular, apparently with people who have too much time on their hand or egos that are even more out of control than mine)

Clay Shirky points out that "blog" hits form a power law, with a few "bloggers" getting the vast majority of hits. Shirky's results echo some of teh results reported in other papers citing power law structure on the Web.

The New Science of (Random) Networks by Rick Durrett, Notices of the American Mathematics Society, February 2004

This is a review of both Linked and Six Degrees: The Science of a Connected Age by Duncan J. Watts. The reviewer also notes Barabási's overheated and egotistical style. So I wonder if the title of the review is a play on another even more egotistical work, A New Kind of Science by Wolfram. Durrett writes:

As I was reading this book, I plotted a review filled with pointed remarks: "Barabási's book, like catastrophe theory, says nothing about everything, which will not please mathematicians, who are more inclined to knowing everything about nothing." "Mathematicians have much to learn from Barabási. We would generate many more sales with titles like Calculus: The Science of Motion. A theory that explains everything from the workings of nanotechnology to the motion of galaxies, from the flowing of blood in our hearts to the emotions that govern it."

Egos aside, both Barabási and Wolfram have important things to say and are worth reading.

The Tipping Point

by Malcolm Gladwell

Little, Brown and Co., 2000

This is my review of Malcolm Gladwell's book The Tipping Point, which discusses, among other things, social networks, including early work done my the experimental psychologist Stanley Milgram.

Scale-free Networks without Growth or Preferential Attachment: Good get Richer by Authors: G. Caldarelli, A. Capocci, P. De Los Rios, M.A. Munoz, October 28, 2002

Emergence of scaling in random networks by Albert-László Barabási, Réka Albert, Science 286, 509-512 (1999)

Hierarchical organization in complex networks by Erzsébet Ravasz, Albert-László Barabási, Physical Review E.

Modeling the Internet's Large-Scale Topology by Soon-Hyung Yook, Hawoong Jeong, and Albert-László Barabási, Proceedings of the Nat'l Academy of Sciences 99, 13382-13386 (2002)

Net Analysis Gets Turbo Boost by Michelle Delio, Wired News, August 21, 2003

This popular article describes some work done at Georgia Tech on rapidly modeling massive internet traffic.

Self-organizing networks and autonomous agents

Albert-László Barabási and his colleagues have modeled various ways that complex networks can form based on a set of mathematically describable rules. It may be possible to model the emergence of the rules described by Albert-László Barabási's equations using "autonomous agents", which have a set of behavior rules. Behavior rules may not be easily defined as equations, since agents can "remember" and "learn". These agents are, in turn, models for more complex autonomous agents, referred to as humans, which have assembled networks like the Internet, social networks, the spread of disease or of ideas.

A brief www.slashdot.org article (linked here) pointed me to Prof. Frank Schweitzer's group at the Humboldt University (Berlin) Instiut für Physik, which has done some work on autonomous agents and self-organizing networks. This work is summarized in a Technology Research New article:

Network builds itself from scratch by Kimberly Patch, Technology Research News March 26/April 2, 2003

Some of the papers by Prof. Schweitzer's on agents and self-organizing networks can be found on his Active Particles / Agent Models web page. This includes the recent paper

Self-Assembling of Networks in an Agent-Based Model by Frank Schweitzer and Benno Tilch Physical Review E Vol. 66 (April, 2002) 026113 (1-9)

WebGraph Department of Science and Information, Universita degli Studi di Milano

Studying very powerlaw graphs (or networks) requires some method for optimizing storage, since the data sets are so large. WebGraph seems to provide such a tool, at least for web pages and hyper-links.

From the abstract of the paper The WebGraph framework I: Compression techniques by Paolo Boldi and Sebastiano Vigna, Technical Report 293-03, Universitartimento di Scienze dell'Informazione, 2003:

Studying web graphs is often difficult due to their large size. Recently, several proposals have been published about various techniques that allow to store [sic] a web graph in memory in a limited space, exploiting the inner redundancies of the web. The WebGraph framework is a suite of codes, algorithms and tools that aims at making it easy to manipulate large web graphs. This papers presents the compression techniques used in WebGraph, which are centred around referentiation and intervalisation (which in turn are dual to each other). WebGraph can compress the WebBase graph (118 Mnodes, 1 Glinks) in as little as 3.08 bits per link, and its transposed version in as little as 2.89 bits per link.

You are who you know, Andrew Leonard, June 15, 2004, Salon

This Salon article describes a small fad that has emerged afor social networking web sites. These web sites allow users to build networks of friends, acquaintances and those who have similar interests. The Ur-web site for this was Friendster. Other social networking sites have spun off of this.

There is an obvious relationship between network theory and the the so called "page rank" algorithms used by search engines.

Patterns in Unstructured Data Discovery, Aggregation, and Visualization by Clara Yu, John Cuadrado, Maciej Ceglowski, and J. Scott Payne

A number of people have suggested a relation between market characteristics and power laws. For example, see Why Stock Markets Crash: Critical Events in Complex Financial Systems by Didier Sornette, Princeton Univ Press, 2002.

Power laws, networks and finance are a growing topic in the research literature as well. Here are a few references from Prof. H. Eugene Stanley's web pages at Boston University.

A Theory of Power-Law Distributions in Financial Market Fluctuations (pdf) by X. Gabaix, P. Gopikrishnan, V. Plerou, and H. E. Stanley, Nature 423, 267-270 (2003)

Multifractal Properties of Price Fluctuations of Stocks and Commodities by K. Matia, Y. Ashkenazy, and H. E. Stanley, Europhys. Lett. 61, 422-428 (2003)

Self-Organized Complexity in Economics and Finance (pdf) by H. E. Stanley, L. A. N. Amaral, S. V. Buldyrev, P. Gopikrishnan, V. Plerou, and M. A. Salinger, Proc. Natl. Acad. Sci. 99-Supp, 2561-2565 (2002)

How a butterfly's wing can bring down Goliath Chaos theories calculate the vulnerability of megasystems by Keay Davidson, San Francisco Chronicle, August 15, 2003

This article briefly discusses the massive power grid failure in the Eastern United States and Canada in terms of highly optimized systems and edge of chaos behavoir.

While this is a non-technical article by a newpaper science writer, I think that the issues it raises may turn out to be true. As power companies have been deregulated they have concentrated on optimizing their profits. This may have resulted in a power grid that operates in an "optimal fashion", but without much margine for error. Systems that operate near their limits may also operate near a boundary where chaotic behavior exists on the other side.

Too Darn Hot, by Philip Ball, Nature, March 17, 2000

Optimal design, robustness, and risk aversion by M.E.J. Newman, Michelle Girvan, and J. Doyne Farmer, 2002 (PDF format). This paper was published in Phys. Rev. Lett. Vol. 89, 028301 (2002)

For historical reasons, I periodically take a look at Doyne Farmer's web page at the Santa Fe Institute to see what he is working on. For me this was the ur-paper on HOT theory. This paper lead me to other papers, which resulted in the discussion on this web page.

COLD safter than HOT, by Philip Ball, Nature, February 27, 2002

This is a brief discussion of the Paper by Newman et al, above.

Robustness and the Internet: Design and evolution by Walter Willinger and John Doyle, March 1, 2002

Highly Optimixed tolerance: Robustness and Design in Complex Systems by J.M. Carlson and John Doyle, Phys. Rev. Letters, Vol. 84, No. 11, Pgs. 2529-2532, March 13, 2000

Power Laws, Highly Optimized Tolerance, and Generalized Source Coding by John Doyle and J.M. Carlson, Phys. Rev. Letters, Vol. 84, No. 24, Pgs. 5656-5659, June 12, 2000

Self-organized criticality in forest-landscape evolution by J.C. Sprott, Janine Bolliger and David J. Mladenoff, Physics Letters A 297 (2002) pgs. 267-271, May 13, 2002

Doyle and Carlson discuss forest fire models and optimized tolerance. This paper by Sprott et al is another discussion on forest evolution and robustness. What is interesting is that I did not notice any references by Sprott et al to the work of Doyle and Carlson.

Robustness in Complex Systems by Steven D. Gribble, Proceedings of the 8th Workshop on Hot Topics in Operating Systems

This is an excellent and rather humbling paper that describes serious failure from relatively small, unexpected errors in a carefully designed distributed storage system

Software, Scale-free Networks and Optimal Design

Scale-free Networks from Optimal Design by S. Valverde, R. Ferrer Cancho and R.V. Sole, Europhysisc Letters, April 16, 2002

Scale-Free Networks from Optimal Design: Additional material by S. Valverde, R. Ferrer-Cancho and R. V. Sole

Sergi Valverde Castillo's Web Page. This web page provides an interesting description of Sergi Castillo's work on software as a scale free network. There are some lovely pictures as well (always important in Network research).

This is a software tool that claims to have transformed the ideas behind scale-free networks and "small worlds" into "a highly innovative software engineering tool". In a rather high level white paper, this company claims that:

Healthy large-scale software is distinguished by low average component dependency (2.5 . 3.5 components) and small average impact of change (1-5%). On the contrary, most poorly written, entangled systems have low stability and low adaptability. Any small changes tend to ripple through the entire structure, bringing avalanches of bugs. Bad software is also difficult to adapt, because it lacks necessary abstractions (insulators, Martin2000) and wires implementations directly to other implementations.

Ideally software should be designed to minimize the impact of changes. If software has a high degree of interconnections (dependencies) changes will ripple through the program. The desire to minimize interdependencies runs counter to the desire to reuse software. For example, classic UNIX software would show heavy reliance on the UNIX standard library. Software reuse means that the interdependence metric is not as simple as this company suggests.

TouchGraph is an impressive open source software package for displaying graphs, ontologies and networks. TouchGraph is written in Java. The TouchGraph Web site states:

TouchGraph provides a hands-on way to visualize networks of interrelated information. Networks are rendered as interactive graphs, which lend themselves to a variety of transformations. By engaging their visual image, a user is able to navigate through large networks, and to explore different ways of arranging the network's components on screen.

Visually navigating through a network is inherently a dynamic process, and steps need to be taken to keep the user feeling oriented and in control. TouchGraph achieves this by keeping the graph looking as static as possible, and more importantly, by making sure that dynamic changes are predicable, repeatable, and undoable. The associative nature of a network makes remembering its structure surprisingly easy, but it is the experience of seeing a series of recurring stable visual images that really gives a boost to the user's memory. The ability to create and navigate these stable images is what makes TouchGraph special, and is also the key to empowering both the designer and the user.

There are many innovations to be made before the evolution of the graph-based interface is complete. TouchGraph LLC seeks to be at the forefront of these developments, and anticipates a time when associative networks, and the graph-based interfaces used to navigate them are omnipresent.

Graph Visualization Tools from the University of Michigan "Warriors of the Net" group.

This web page includes likes to 3D and 2D graph viewers. The software

is from various sources, including caida.org (the Cooperative Association

for Internet Data Analysis) and Tamara Munzner, at Stanford (e.g., her

LEDA: Library of Efficient Data Types and Algorithms.

LEDA is a library of C++ graph data structures and algorithms. These algorithms concentrate on classical graph algorithms. LEDA is described in an excellent book, LEDA: A Platform for Combinatorial and Geometrical Computing by Mehlhorn and Näher, Cambridge University Press, 1999.

LEDA has been commericalized by a German company Algorithmic Solutions Software. In addition to LEDA they sell other graph and network software.

AT&T Labs Graphviz: Open source graph visualization software

Graphviz apparently evolved from the old troff era dot software. Graphviz is considerably more powerful than the old dot program and has been used to display very large graphs like those involved in VLSI designs and software. Graphviz runs on both Windoz and UNIX.

aiSee graph visualization software

This is a commerical product which runs on a variety of platforms. It is free for non-commercial use. A posting in comp.compiles (by someone from AbsInt, the company that developed aiSee) describes aiSee as follows:

aiSee was developed to visualize the internal data structures typically found in compilers. Today it is widely used in many different areas:

- Database management (entity relationship diagrams)

- Genealogy (family trees)

- Business management (organization charts)

- Software development (control flow graphs, PERT charts)

- Hardware design (circuit diagrams, networks)

aiSee reads a textual, easy-to-read and easy-to-learn graph specification and automatically calculates a customizable graph layout. This layout is then displayed, and can be interactively explored, printed, or exported to various formats.

aiSee has been optimized to handle huge graphs automatically generated by other applications (e.g. compilers). It is available for Windows, various Unix dialects, and Mac OS X/X11.

Ian Kaplan

December 2002

Last updated on: June 2004